这讲会提供示例代码!

1. “迷宫寻宝” 之任务发布

已知迷宫地图的覆盖范围(10*10m),其中某处藏有一处明显标记的宝藏(红色圆球),机器人在未知环境的情况下从起点出发,自主寻找宝藏,寻得宝藏之后需返回起点,任务完成。

- 地图预先使用Gazebo创建完成,并在Gazebo完成所有任务;

- 机器人在起点运动时开始计时,寻得宝藏回到起点后计时终止,须在5min内完成任务;

- 机器人搭载的传感器没有限制;

- 机器人接近宝藏1m范围内即认为获取宝藏;

- 机器人获取宝藏后,需通过语音播报状态信息;

- 宝藏的位置不固定,允许动态调整。

迷宫环境可以使用Building Editor建立,二维图中有长度信息。

2. “迷宫寻宝” 之任务分析

- 机器人建模仿真

- 图像识别

- 语音交互

- 科大讯飞 SDK

- 自主导航

- SLAM

- ROS通信机制

- 系统设计与集成

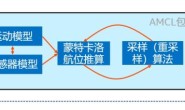

需要通过图像识别object_detect寻找到宝藏的位置,通过目标位置调整机器人的位置,使得机器人向它运动(视觉伺服),通过exploring_maze导航、通过gmapping建图。将导航目标点发送给move_base进行避障自主导航。tts_subscribe是之前提到过的科大讯飞开放平台提供的语音合成例程,这里用来发布寻找到目标物体的语音。

需要通过图像识别object_detect寻找到宝藏的位置,通过目标位置调整机器人的位置,使得机器人向它运动(视觉伺服),通过exploring_maze导航、通过gmapping建图。将导航目标点发送给move_base进行避障自主导航。tts_subscribe是之前提到过的科大讯飞开放平台提供的语音合成例程,这里用来发布寻找到目标物体的语音。

3. “迷宫寻宝” 之具体实现

①机器人建模

为了完成上述任务,给机器人搭建仿真环境时,添加camera、laser。

mbot_base_gazebo.xacro

mbot_base_gazebo.xacro

<?xml version="1.0"?>

<robot name="mbot" xmlns:xacro="http://www.ros.org/wiki/xacro">

<!-- PROPERTY LIST -->

<xacro:property name="M_PI" value="3.1415926"/>

<xacro:property name="base_mass" value="1" />

<xacro:property name="base_radius" value="0.20"/>

<xacro:property name="base_length" value="0.16"/>

<xacro:property name="wheel_mass" value="0.2" />

<xacro:property name="wheel_radius" value="0.06"/>

<xacro:property name="wheel_length" value="0.025"/>

<xacro:property name="wheel_joint_y" value="0.19"/>

<xacro:property name="wheel_joint_z" value="0.05"/>

<xacro:property name="caster_mass" value="0.2" />

<xacro:property name="caster_radius" value="0.015"/> <!-- wheel_radius - ( base_length/2 - wheel_joint_z) -->

<xacro:property name="caster_joint_x" value="0.18"/>

<!-- Defining the colors used in this robot -->

<material name="yellow">

<color rgba="1 0.4 0 1"/>

</material>

<material name="black">

<color rgba="0 0 0 0.95"/>

</material>

<material name="gray">

<color rgba="0.75 0.75 0.75 1"/>

</material>

<!-- Macro for inertia matrix -->

<xacro:macro name="sphere_inertial_matrix" params="m r">

<inertial>

<mass value="${m}" />

<inertia ixx="${2*m*r*r/5}" ixy="0" ixz="0"

iyy="${2*m*r*r/5}" iyz="0"

izz="${2*m*r*r/5}" />

</inertial>

</xacro:macro>

<xacro:macro name="cylinder_inertial_matrix" params="m r h">

<inertial>

<mass value="${m}" />

<inertia ixx="${m*(3*r*r+h*h)/12}" ixy="0" ixz="0"

iyy="${m*(3*r*r+h*h)/12}" iyz="0"

izz="${m*r*r/2}" />

</inertial>

</xacro:macro>

<!-- Macro for robot wheel -->

<xacro:macro name="wheel" params="prefix reflect">

<joint name="${prefix}_wheel_joint" type="continuous">

<origin xyz="0 ${reflect*wheel_joint_y} ${-wheel_joint_z}" rpy="0 0 0"/>

<parent link="base_link"/>

<child link="${prefix}_wheel_link"/>

<axis xyz="0 1 0"/>

</joint>

<link name="${prefix}_wheel_link">

<visual>

<origin xyz="0 0 0" rpy="${M_PI/2} 0 0" />

<geometry>

<cylinder radius="${wheel_radius}" length="${wheel_length}"/>

</geometry>

<material name="gray" />

</visual>

<collision>

<origin xyz="0 0 0" rpy="${M_PI/2} 0 0" />

<geometry>

<cylinder radius="${wheel_radius}" length="${wheel_length}"/>

</geometry>

</collision>

<cylinder_inertial_matrix m="${wheel_mass}" r="${wheel_radius}" h="${wheel_length}" />

</link>

<gazebo reference="${prefix}_wheel_link">

<material>Gazebo/Gray</material>

</gazebo>

<!-- Transmission is important to link the joints and the controller -->

<transmission name="${prefix}_wheel_joint_trans">

<type>transmission_interface/SimpleTransmission</type>

<joint name="${prefix}_wheel_joint" >

<hardwareInterface>hardware_interface/VelocityJointInterface</hardwareInterface>

</joint>

<actuator name="${prefix}_wheel_joint_motor">

<hardwareInterface>hardware_interface/VelocityJointInterface</hardwareInterface>

<mechanicalReduction>1</mechanicalReduction>

</actuator>

</transmission>

</xacro:macro>

<!-- Macro for robot caster -->

<xacro:macro name="caster" params="prefix reflect">

<joint name="${prefix}_caster_joint" type="continuous">

<origin xyz="${reflect*caster_joint_x} 0 ${-(base_length/2 + caster_radius)}" rpy="0 0 0"/>

<parent link="base_link"/>

<child link="${prefix}_caster_link"/>

<axis xyz="0 1 0"/>

</joint>

<link name="${prefix}_caster_link">

<visual>

<origin xyz="0 0 0" rpy="0 0 0"/>

<geometry>

<sphere radius="${caster_radius}" />

</geometry>

<material name="black" />

</visual>

<collision>

<origin xyz="0 0 0" rpy="0 0 0"/>

<geometry>

<sphere radius="${caster_radius}" />

</geometry>

</collision>

<sphere_inertial_matrix m="${caster_mass}" r="${caster_radius}" />

</link>

<gazebo reference="${prefix}_caster_link">

<material>Gazebo/Black</material>

</gazebo>

</xacro:macro>

<xacro:macro name="mbot_base_gazebo">

<link name="base_footprint">

<visual>

<origin xyz="0 0 0" rpy="0 0 0" />

<geometry>

<box size="0.001 0.001 0.001" />

</geometry>

</visual>

</link>

<gazebo reference="base_footprint">

<turnGravityOff>false</turnGravityOff>

</gazebo>

<joint name="base_footprint_joint" type="fixed">

<origin xyz="0 0 ${base_length/2 + caster_radius*2}" rpy="0 0 0" />

<parent link="base_footprint"/>

<child link="base_link" />

</joint>

<link name="base_link">

<visual>

<origin xyz=" 0 0 0" rpy="0 0 0" />

<geometry>

<cylinder length="${base_length}" radius="${base_radius}"/>

</geometry>

<material name="yellow" />

</visual>

<collision>

<origin xyz=" 0 0 0" rpy="0 0 0" />

<geometry>

<cylinder length="${base_length}" radius="${base_radius}"/>

</geometry>

</collision>

<cylinder_inertial_matrix m="${base_mass}" r="${base_radius}" h="${base_length}" />

</link>

<gazebo reference="base_link">

<material>Gazebo/Blue</material>

</gazebo>

<wheel prefix="left" reflect="1"/>

<wheel prefix="right" reflect="-1"/>

<caster prefix="front" reflect="-1"/>

<caster prefix="back" reflect="1"/>

<!-- controller -->

<gazebo>

<plugin name="differential_drive_controller"

filename="libgazebo_ros_diff_drive.so">

<rosDebugLevel>Debug</rosDebugLevel>

<publishWheelTF>true</publishWheelTF>

<robotNamespace>/</robotNamespace>

<publishTf>1</publishTf>

<publishWheelJointState>true</publishWheelJointState>

<alwaysOn>true</alwaysOn>

<updateRate>100.0</updateRate>

<legacyMode>true</legacyMode>

<leftJoint>left_wheel_joint</leftJoint>

<rightJoint>right_wheel_joint</rightJoint>

<wheelSeparation>${wheel_joint_y*2}</wheelSeparation>

<wheelDiameter>${2*wheel_radius}</wheelDiameter>

<broadcastTF>1</broadcastTF>

<wheelTorque>30</wheelTorque>

<wheelAcceleration>1.8</wheelAcceleration>

<commandTopic>cmd_vel</commandTopic>

<odometryFrame>odom</odometryFrame>

<odometryTopic>odom</odometryTopic>

<robotBaseFrame>base_footprint</robotBaseFrame>

</plugin>

</gazebo>

</xacro:macro>

</robot>

mbot_with_camera_laser_gazebo.xacro

<?xml version="1.0"?>

<robot name="arm" xmlns:xacro="http://www.ros.org/wiki/xacro">

<xacro:include filename="$(find mbot_description)/urdf/mbot_base_gazebo.xacro" />

<xacro:include filename="$(find mbot_description)/urdf/sensors/camera_gazebo.xacro" />

<xacro:include filename="$(find mbot_description)/urdf/sensors/lidar_gazebo.xacro" />

<xacro:property name="camera_offset_x" value="0.17" />

<xacro:property name="camera_offset_y" value="0" />

<xacro:property name="camera_offset_z" value="0.10" />

<xacro:property name="pillar_length" value="0.1" />

<xacro:property name="pillar_radius" value="0.01" />

<xacro:property name="pillar_mass" value="0.1" />

<xacro:property name="lidar_offset_x" value="0" />

<xacro:property name="lidar_offset_y" value="0" />

<xacro:property name="lidar_offset_z" value="0.07" />

<!-- Camera -->

<joint name="camera_joint" type="fixed">

<origin xyz="${camera_offset_x} ${camera_offset_y} ${camera_offset_z}" rpy="0 0 0" />

<parent link="base_link"/>

<child link="camera_link"/>

</joint>

<xacro:usb_camera prefix="camera"/>

<link name="pillar_link">

<visual>

<origin xyz="0 0 0" rpy="0 0 0" />

<geometry>

<cylinder length="${pillar_length}" radius="${pillar_radius}"/>

</geometry>

<material name="gray" />

</visual>

<collision>

<origin xyz="0 0 0" rpy="0 0 0" />

<geometry>

<cylinder length="${pillar_length}" radius="${pillar_radius}"/>

</geometry>

</collision>

<cylinder_inertial_matrix m="${pillar_mass}" r="${pillar_radius}" h="${pillar_length}" />

</link>

<joint name="pillar_joint" type="fixed">

<origin xyz="0 0 0.10" rpy="0 0 0" />

<parent link="base_link"/>

<child link="pillar_link"/>

</joint>

<!-- lidar -->

<joint name="lidar_joint" type="fixed">

<origin xyz="${lidar_offset_x} ${lidar_offset_y} ${lidar_offset_z}" rpy="0 0 0" />

<parent link="pillar_link"/>

<child link="laser_link"/>

</joint>

<xacro:rplidar prefix="laser"/>

<mbot_base_gazebo/>

</robot>

注意:camera_gazebo.xacro和lidar_gazebo.xacro和ROS理论与实践(以移动机器人为例)连载(五) ——构建机器人仿真平台中提到的相同,可以自行修改参数。 这里就不放.launch文件了,很简单,相信看到这里的童鞋都知道怎么写了。前面的课程 ROS理论与实践(以移动机器人为例)连载(五) ——构建机器人仿真平台 也有描述。

②图像处理

这里使用对颜色进行识别,因为目标物体和背景颜色差距很大。 object_detect.py

这里使用对颜色进行识别,因为目标物体和背景颜色差距很大。 object_detect.py

#!/usr/bin/env python

# -*- coding: utf-8 -*-

import rospy

import cv2

from cv_bridge import CvBridge, CvBridgeError

from sensor_msgs.msg import Image

import numpy as np

from math import *

from geometry_msgs.msg import Pose

BLUE_LOW = 0

BLUE_HIGH = 20

GREEN_LOW = 20

GREEN_HIGH = 60

RED_LOW = 80

RED_HIGH = 150

class image_converter:

def __init__(self):

# 创建cv_bridge,声明图像的发布者和订阅者

self.bridge=CvBridge() #ROS图像和OpenCV图像信息的转换

self.image_sub=rospy.Subscriber("/camera/image_raw", Image, self.callback) #订阅Image,Camera的话题

self.image_pub=rospy.Publisher("object_detect_image", Image, queue_size=1) #发布识别结果

self.target_pub=rospy.Publisher("object_detect_target", Pose, queue_size=1) #发布target的Pose信息

def callback(self,data):

# 使用cv_bridge将ROS的图像数据转换成OpenCV的图像格式

try:

cv_image = self.bridge.imgmsg_to_cv2(data, "bgr8") #将ROS中拿到的数据转换成OpenCV能够使用的数据

except CvBridgeError as e:

print e

# define the list of boundaries in BGR

boundaries = [([BLUE_LOW, GREEN_LOW, RED_LOW], [BLUE_HIGH, GREEN_HIGH, RED_HIGH])] #识别颜色的范围值BGR

# loop over the boundaries

# print(boundaries)

for (lower, upper) in boundaries:

# create NumPy arrays from the boundaries

lower = np.array(lower, dtype = "uint8")

upper = np.array(upper, dtype = "uint8")

# find the colors within the specified boundaries and apply the mask

mask = cv2.inRange(cv_image, lower, upper)

output = cv2.bitwise_and(cv_image, cv_image, mask = mask)

cvImg = cv2.cvtColor(output, 6) #cv2.COLOR_BGR2GRAY

npImg = np.asarray( cvImg )

thresh = cv2.threshold(npImg, 1, 255, cv2.THRESH_BINARY)[1]

# find contours in the thresholded image

img, cnts, hierarchy = cv2.findContours(thresh, cv2.RETR_LIST, cv2.CHAIN_APPROX_SIMPLE)

#cnts = cnts[0]

# loop over the contours

for c in cnts:

# compute the center of the contour

M = cv2.moments(c)

if int(M["m00"]) not in range(20000, 250000): #M["m00"]是面积,如果面积不达标就不认为是宝藏

continue

cX = int(M["m10"] / M["m00"])

cY = int(M["m01"] / M["m00"])

cv2.drawContours(cv_image, [c], -1, (0, 0, 255), 2)

cv2.circle(cv_image, (cX, cY), 1, (0, 0, 255), -1)

objPose = Pose()

objPose.position.x = cX;

objPose.position.y = cY;

objPose.position.z = M["m00"];

self.target_pub.publish(objPose)

# 显示Opencv格式的图像

# cv2.imshow("Image window", cv_image)

# cv2.waitKey(3)

# 再将opencv格式额数据转换成ros image格式的数据发布

try:

self.image_pub.publish(self.bridge.cv2_to_imgmsg(cv_image, "bgr8"))

except CvBridgeError as e:

print e

if __name__ == '__main__':

try:

# 初始化ros节点

rospy.init_node("object_detect")

rospy.loginfo("Starting detect object")

image_converter()

rospy.spin()

except KeyboardInterrupt:

print "Shutting down object_detect node."

cv2.destroyAllWindows()

那么有一个问题上面设置的检测颜色范围由于在Gazebo内光影的问题,实际每个人的是不相同的该如何去测算自己模型中的RGB区间呢? 我们可以把检测宝藏在Gazebo仿真环境中和机器人摄像头放在一起,保存下rqt_image_view中的显示图片。Gazebo仿真环境上面的模型构建中已经提到,有缺省的在之前的教程中也必然提到了。 这是保存好的图片↓

然后丢到这个程序里↓ hsv_test.py

然后丢到这个程序里↓ hsv_test.py

# -*- coding:utf-8 -*-

import cv2

img = cv2.imread('image.png')

gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

hsv = cv2.cvtColor(img, cv2.COLOR_BGR2HSV)

def mouse_click(event, x, y, flags, para):

if event == cv2.EVENT_LBUTTONDOWN: # 左边鼠标点击

#print('PIX:', x, y)

print("BGR:", img[y, x])

#print("GRAY:", gray[y, x])

#print("HSV:", hsv[y, x])

if __name__ == '__main__':

cv2.namedWindow("img")

cv2.setMouseCallback("img", mouse_click)

while True:

cv2.imshow('img', img)

if cv2.waitKey() == ord('q'):

break

cv2.destroyAllWindows()

检测结果↓

检测结果↓

运行成功后可以使用rostopic echo /object_detect_target打印出发布的目标位置的圆心信息,( x , y ) (x,y)(x,y)当然是圆心坐标了,z zz是这个目标在Camera二维图像中的面积。

运行成功后可以使用rostopic echo /object_detect_target打印出发布的目标位置的圆心信息,( x , y ) (x,y)(x,y)当然是圆心坐标了,z zz是这个目标在Camera二维图像中的面积。

③视觉伺服

示例代码 move_to_target.cpp↓ 代码中使用宏定义,定义了机器人的三种状态以及因为z zz是二维图像中目标的面积,那么定义了GET_TARGET_SIZE用来确定是否到达限定距离。 同时,在机器人获取宝藏后,发布话题/voiceWords通过tts_subscribe.cpp也就是之前在机器语音那节使用过的由科大讯飞开放平台提供的语音合成代码。用来发布当前状态的语音信息。

#include<math.h>

#include <ros/ros.h>

#include <geometry_msgs/Twist.h>

#include <geometry_msgs/Pose.h>

#include <std_msgs/Int8.h>

#include <std_msgs/String.h>

#define STATUS_EXPLORING (0)

#define STATUS_CLOSE_TARGET (1)

#define STATUS_GO_HOME (2)

#define GET_TARGET_SIZE (90000)

ros::Publisher vel_pub;

ros::Publisher cmd_pub;

ros::Publisher voice_pub;

int status_flag = STATUS_EXPLORING;

// 接收到订阅的消息后,会进入消息回调函数

void poseCallback(const geometry_msgs::Pose::ConstPtr& msg)

{

// 将接收到的消息打印出来

ROS_INFO("Target pose: x:%0.6f, y:%0.6f, z:%0.6f", msg->position.x, msg->position.y, msg->position.z);

if(status_flag==STATUS_EXPLORING)

{

status_flag=STATUS_CLOSE_TARGET;

std_msgs::Int8 cmd;

cmd.data=STATUS_CLOSE_TARGET;

cmd_pub.publish(cmd);

std_msgs::String msg;

msg.data="发现宝藏,向宝藏进发";

voice_pub.publish(msg);

}

else if(status_flag==STATUS_CLOSE_TARGET && msg->position.z > GET_TARGET_SIZE)

{

status_flag=STATUS_GO_HOME;

std_msgs::Int8 cmd;

cmd.data=STATUS_GO_HOME;

cmd_pub.publish(cmd);

std_msgs::String msg;

msg.data="拿到宝藏,撤退";

voice_pub.publish(msg);

}

else if(status_flag==STATUS_CLOSE_TARGET) //通过目标位置修改运动速度

{

//初始化Twist类型的消息

geometry_msgs::Twist vel_msg;

vel_msg.linear.x = (150000 - msg->position.z) / 100000 * 0.3;

vel_msg.angular.z = (640 - msg->posetion.x) / 640 * 0.3;

//若目标中心点较图片x轴中心640偏右,那么按照计算公式angular.z是负值,负值机器人顺时针旋转即右转,使得中心点挪向中心;反之亦然

//发布消息

vel_pub.publish(vel_msg);

ROS_INFO("Publish velocity command[%0.2f m/s, %0.2f rad/s]", vel_msg.linear.x, vel_msg.angular.z);

}

}

int main(int argc, char **argv)

{

// ROS节点初始化

ros::init(argc, argv, "move_to_target");

// 创建节点句柄

ros::NodeHandle n;

// 一个节点可以根据需要设定若干个订阅或者发布者

// 创建一个Subscriber,订阅名为/turtle1/pose的topic,注册回调函数poseCallback

ros::Subscriber pose_sub = n.subscribe("/object_detect_pose", 10, poseCallback);

// 创建一个Publisher,发布名为cmd_vel的topic,消息类型为geometry_msgs::Twist,队列长度10

vel_pub = n.advertise<geometry_msgs::Twist>("/cmd_vel", 10);

// 创建一个Publisher,发布名为cmd_vel的topic,消息类型为std_msgs::Int8,队列长度10

cmd_pub = n.advertise<std_msgs::Int8>("/exploring_cmd", 10);

// 发布语音输出内容

voice_pub = n.advertise<std_msgs::String>("voiceWords", 1000);

// 循环等待回调函数

ros::spin();

return 0;

}

上述代码中回调函数poseCallback内的条件语句属于之后集成系统的部分,可以先忽略,只关注最后一段else if即可。

④导航&SLAM

联系上一个代码,如果接收到/object_detect_pose即检测到目标宝藏时,会调回回调函数,回调函数中初始化了status_flag这个变量为STATUS_CLOSE_TARGET,那么跳入视觉伺服,进行机器人检测到宝藏后的最后移动;倘若既满足STATUS_CLOSE_TARGET,又满足面积比预设的面积阈值大,也就是到达了能够抓取宝藏的距离,status_flag这个变量被赋值为STATUS_GO_HOME,也就是fetch the treasure,那么在下面↓这个代码中就可以看到设定的MoveBaseGoal是初始原点;另一种status_flag变量形式是STATUS_EXPLORING,就是未找到目标物体,但是仍在建图导航+寻找中,那么随机导航目标点,同时进行物体的检测。当检测到物体后就跳转到上面说过的,取消self.move_base.cancel_goal()随机导航,转向视觉伺服。 下面是导航的示例代码↓ exploring_maze.py

#!/usr/bin/env python

# -*- coding: utf-8 -*-

import roslib;

import rospy

import actionlib

import random

from actionlib_msgs.msg import *

from geometry_msgs.msg import Pose, PoseWithCovarianceStamped, Point, Quaternion, Twist

from move_base_msgs.msg import MoveBaseAction, MoveBaseGoal

from std_msgs.msg import Int8

STATUS_EXPLORING = 0

STATUS_CLOSE_TARGET = 1

STATUS_GO_HOME = 2

class ExploringMaze():

def __init__(self):

rospy.init_node('exploring_maze', anonymous=True)

rospy.on_shutdown(self.shutdown) # shutdown是用来在CTRL+C取消后,给机器人发布一个0速度的指令

# 在每个目标位置暂停的时间 (单位:s)

self.rest_time = rospy.get_param("~rest_time", 0.5)

# 发布控制机器人的消息

self.cmd_vel_pub = rospy.Publisher('cmd_vel', Twist, queue_size=5)

# 创建一个Subscriber,订阅/exploring_cmd

rospy.Subscriber("/exploring_cmd", Int8, self.cmdCallback)

# 订阅move_base服务器的消息

self.move_base = actionlib.SimpleActionClient("move_base", MoveBaseAction) # 订阅move_base服务器,相当于这是个客户端用来给导航发布Goal指令的

rospy.loginfo("Waiting for move_base action server...")

# 60s等待时间限制

self.move_base.wait_for_server(rospy.Duration(60))

rospy.loginfo("Connected to move base server")

# 保存运行时间的变量

start_time = rospy.Time.now()

running_time = 0

rospy.loginfo("Starting exploring maze")

# 初始位置

start_location = Pose(Point(0, 0, 0), Quaternion(0.000, 0.000, 0.709016873598, 0.705191515089)) # 设定初始位置实际上就是零位置HOME

# 命令初值

self.exploring_cmd = 0

# 开始主循环,随机导航

while not rospy.is_shutdown():

# 设定下一个随机目标点

self.goal = MoveBaseGoal()

self.goal.target_pose.pose = start_location # 先回到初始点

self.goal.target_pose.header.frame_id = 'map'

self.goal.target_pose.header.stamp = rospy.Time.now() # 把当前时间发布

if self.exploring_cmd is STATUS_EXPLORING: # 探索,随机点导航

self.goal.target_pose.pose.position.x = random.randint(0,8)

self.goal.target_pose.pose.position.y = random.randint(0,9)

elif self.exploring_cmd is STATUS_CLOSE_TARGET: # 视觉伺服

rospy.sleep(0.1)

elif self.exploring_cmd is STATUS_GO_HOME: # 返航

self.goal.target_pose.pose.position.x = 0

self.goal.target_pose.pose.position.y = 0

# 让用户知道下一个位置

rospy.loginfo("Going to:" + str(self.goal.target_pose.pose))

# 向下一个位置进发

self.move_base.send_goal(self.goal)

# 五分钟时间限制

finished_within_time = self.move_base.wait_for_result(rospy.Duration(300))

# 查看是否成功到达

if not finished_within_time:

self.move_base.cancel_goal()

rospy.loginfo("Timed out achieving goal")

else:

state = self.move_base.get_state()

if state == GoalStatus.SUCCEEDED:

rospy.loginfo("Goal succeeded!")

else:

rospy.loginfo("Goal failed!")

# 运行所用时间

running_time = rospy.Time.now() - start_time

running_time = running_time.secs / 60.0

# 输出本次导航的所有信息

rospy.loginfo("current time:" + str(trunc(running_time,1))+"min")

self.shutdown()

def cmdCallback(self, msg):

rospy.loginfo("Receive exploring cmd : %d", msg.data)

self.exploring_cmd = msg.data

if self.exploring_cmd is STATUS_CLOSE_TARGET:

rospy.loginfo("Stopping the robot...")

self.move_base.cancel_goal()

def shutdown(self):

rospy.loginfo("Stopping the robot...")

self.move_base.cancel_goal()

rospy.sleep(2)

self.cmd_vel_pub.publish(Twist())

rospy.sleep(1)

def trunc(f, n):

slen = len('%.*f' % (n, f))

return float(str(f)[:slen])

if __name__ == '__main__':

try:

ExploringMaze()

rospy.spin()

# spin一直在订阅exploring_cmd如果flag有变化,那么机器人也在导航和视觉伺服直接跳换

except rospy.ROSInterruptException:

rospy.loginfo("Exploring maze finished.")

大体上的介绍和代码就是这样的,三个.launch文件如下:

- mbot_maze_gazebo.launch 也就是仿真模型

<launch>

<!-- 设置launch文件的参数 -->

<arg name="world_name" value="$(find mbot_gazebo)/worlds/maze.world"/>

<arg name="paused" default="false"/>

<arg name="use_sim_time" default="true"/>

<arg name="gui" default="true"/>

<arg name="headless" default="false"/>

<arg name="debug" default="false"/>

<!-- 运行gazebo仿真环境 -->

<include file="$(find gazebo_ros)/launch/empty_world.launch">

<arg name="world_name" value="$(arg world_name)" />

<arg name="debug" value="$(arg debug)" />

<arg name="gui" value="$(arg gui)" />

<arg name="paused" value="$(arg paused)"/>

<arg name="use_sim_time" value="$(arg use_sim_time)"/>

<arg name="headless" value="$(arg headless)"/>

</include>

<!-- 加载机器人模型描述参数 -->

<param name="robot_description" command="$(find xacro)/xacro --inorder '$(find mbot_description)/urdf/mbot_with_camera_laser_gazebo.xacro'" />

<!-- 运行joint_state_publisher节点,发布机器人的关节状态 -->

<node name="joint_state_publisher" pkg="joint_state_publisher" type="joint_state_publisher" ></node>

<!-- 运行robot_state_publisher节点,发布tf -->

<node name="robot_state_publisher" pkg="robot_state_publisher" type="robot_state_publisher" output="screen" >

<param name="publish_frequency" type="double" value="50.0" />

</node>

<!-- 在gazebo中加载机器人模型-->

<node name="urdf_spawner" pkg="gazebo_ros" type="spawn_model" respawn="false" output="screen"

args="-urdf -model mbot -param robot_description"/>

</launch>

- exploring_slam_demo.launch 导航和建图,并根据exploring_cmd进行系统上与视觉伺服控制的替换

<launch>

<include file="$(find mbot_navigation)/launch/gmapping.launch"/>

<!-- 运行move_base节点 -->

<include file="$(find mbot_navigation)/launch/move_base.launch" />

<!-- 运行rviz -->

<node pkg="rviz" type="rviz" name="rviz" args="-d $(find mbot_navigation)/rviz/nav.rviz"/>

</launch>

这个包含了 gmapping.launch 和 move_base.launch 上一讲我们都提到过,可以查看ROS理论与实践(以移动机器人为例)连载(九) ——机器人自主导航。

- find_target.launch 寻找宝藏

<launch>

<node name="exploring_maze" type="exploring_maze.py" pkg="mbot_navigation" output="screen" />

<node name="object_detect" type="object_detect.py" pkg="mbot_vision" output="screen"/>

<node name="move_to_target" type="move_to_target" pkg="mbot_vision" output="screen" />

<node name="tts_subscribe" type="tts_subscribe" pkg="mbot_voice" output="screen" />

</launch>

大致的就这样结束了,当然在已知map的情况下不使用随机目标点导航,而是有目的的确定几个空间下的关键点,对整个任务完成效率更高。 想要如何获得地图中某个点的坐标和四元数,可以使用rostopic echo /move_base/goal来打印出目标点的坐标,使用2D Navigation Go的GUI进行设定目标点。